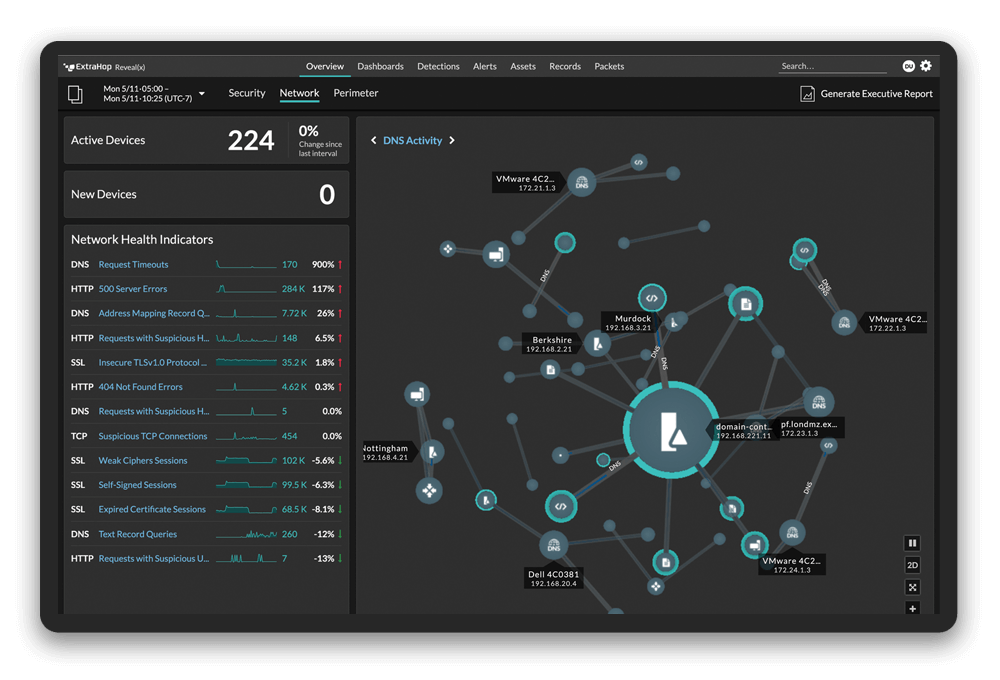

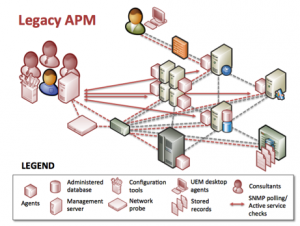

While it's true that application performance monitoring (APM) is only as good as its weakest link, conventional wisdom, as advocated by legacy APM vendors, suggests a "more is better" philosophy: add more agents, collect more packets, run more synthetic transactions. In our discussions with customers, we've found that this quest to keep bolting more and more monitoring solutions onto more and more elements within the data center often results in a monitoring infrastructure that is more complicated than the applications themselves! This burden imposed by legacy monitoring solutions leads to incredible inefficiency and excessive cost.

The complexity in legacy APM results from a flawed bottom-up model for monitoring applications. This model suggests that if you monitor every single element independently, you are therefore monitoring your entire environment. This logic is valid only if the complex interdependencies among elements are also monitored to infer how each element affects overall transaction flow, which is a nearly impossible task using traditional approaches. At the massive scale of today's enterprise datacenters, correlating an anomaly within a single system to actual end-user performance is extremely difficult, if not impossible. By itself, a metric such as high processor utilization on a single server has no context and is difficult to isolate among the thousands of similar alerts that fire every hour. Legacy APM vendors attempt to solve this issue by writing more and more complicated algorithms within their correlation engines, resulting in teams of consultants deploying APM solutions in a never-ending attempt to map problems back to specific system-level metrics. This bottom-up attempt to collect more data from more systems distributed across more datacenters and even throughout the cloud is not viable and inevitably leads to a monitoring solution that is more complicated than the applications it is intended to manage.

Sadly, there's a reason legacy application performance monitoring vendors hesitate to solve this problem: their dependency on consulting revenue. Even if a legacy APM vendor succeeded in developing a simple and elegant solution that passively analyzed every transaction across every tier—thereby obviating the need for millions of dollars in consultant-driven customized correlation engines—would they release it? Probably not, as it would break their revenue model.

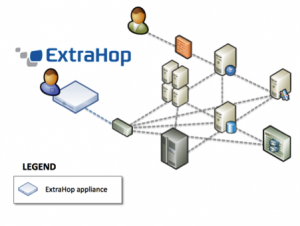

At ExtraHop, we believe it's time to kill your agents and stop the arms race that is costing you millions of dollars and years of consulting effort to implement an effective APM platform. ExtraHop Networks is the first vendor to release a product based on a top-down approach, leveraging the data that is already flowing among application components over the network. We do not believe that you can solve what is fundamentally a signal-to-noise ratio problem by making more noise. The information required to analyze application performance is already available on the wire. What's needed is the ability to analyze the performance of highly interdependent and virtualized applications at a macro level and distill the information so it is usable across the IT organization. An end user looks at an application as an integrated whole that either works or is broken.
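
To make the top-down idea a little more concrete, here is a minimal sketch of how response times could be derived purely from messages observed on the wire, with no agent on any server. It is an illustration only and not a description of ExtraHop's implementation: the `WireEvent` record, its fields, and both function names are hypothetical, and we assume each request/response exchange can already be identified by a `flow_id`.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class WireEvent:
    """One message seen on the network (hypothetical record format)."""
    timestamp: float  # seconds, as captured on the wire
    flow_id: str      # identifies one request/response exchange (assumed)
    tier: str         # e.g. "web", "app", "db"
    kind: str         # "request" or "response"


def response_times_by_tier(events):
    """Pair each request with its response and group the latencies by tier."""
    pending = {}                   # (tier, flow_id) -> request timestamp
    latencies = defaultdict(list)  # tier -> list of response times
    for ev in sorted(events, key=lambda e: e.timestamp):
        key = (ev.tier, ev.flow_id)
        if ev.kind == "request":
            pending[key] = ev.timestamp
        elif ev.kind == "response" and key in pending:
            latencies[ev.tier].append(ev.timestamp - pending.pop(key))
    return dict(latencies)


def slowest_tier(latencies):
    """Return the tier with the highest median response time."""
    return max(latencies, key=lambda tier: median(latencies[tier]))
```

Feeding this a stream of observed events yields a per-tier view of response time for real transactions, which is the kind of context that a standalone metric such as processor utilization on one server does not provide.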

Application performance monitoring can be this simple: the entire application and all of its elements can be monitored and managed from a simple yet powerful solution. It should not take 6 to 12 months and $10 to $20 million to implement an effective application performance monitoring platform. And with ExtraHop, it doesn't. Our solution installs in minutes and offers full visibility into production application performance within hours. Sound too good to be true? It probably does, given the amount of marketing noise out there, but we would love to prove it. Give us a call or check out the ExtraHop demo to learn more.