ExtraHop Cloud-Scale ML is an industry-leading architecture for delivering machine learning capabilities, including real-time threat detection, network performance analytics, and automated root cause analysis, by intelligently allocating ML workloads between on-prem network sensor appliances and cloud-hosted ML engines.

So how does it work?

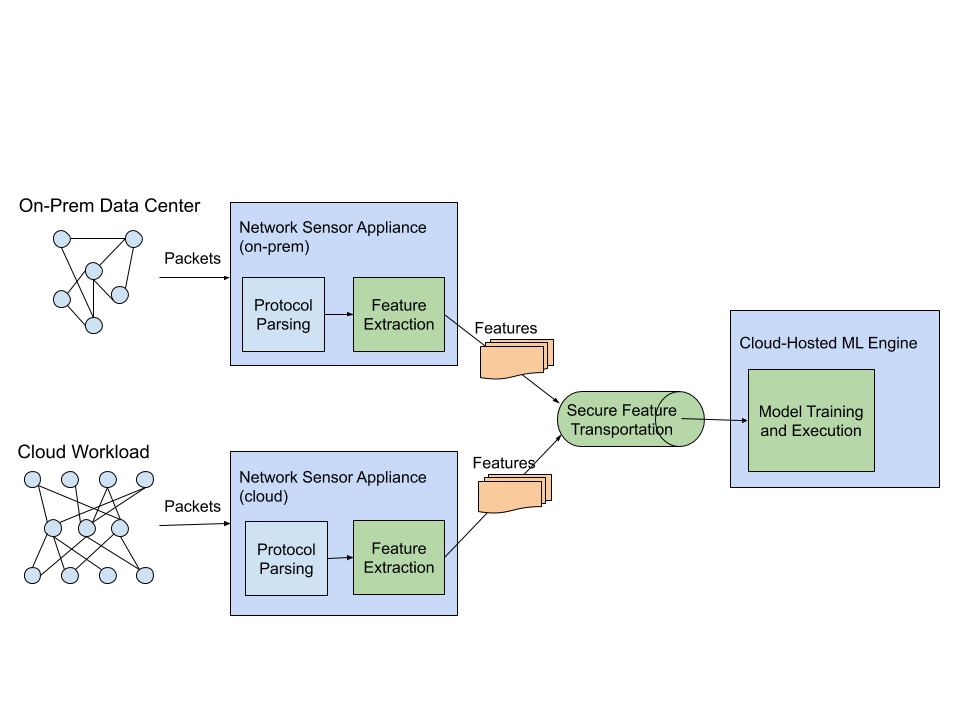

Simple. We start with network packets, extract features, securely transport those features to the cloud, train and execute ML models, and deliver highly accurate detections and insights to our customers:

Now, strap in—it's time for a lot more detail.

Why the "Cloud" in "Cloud-Scale Machine Learning" Matters

To turn network packets into insights and detections using ML, the raw packets must first be converted into ML features (or metadata), which later serve as inputs to ML models. This conversion from raw packets to ML features is commonly called feature extraction, and it is typically very data-intensive because most networks today can move several gigabytes of data every second.

Given this volume of data, it is best to perform these data-intensive computations close to the data sources, for example directly on the on-prem network sensor appliances. In contrast, real-time detection and adaptive modeling of up to millions of entities on customers' networks requires continuously training and executing ML models, which is very compute-intensive and typically demands large amounts of memory and CPU.

While other vendors attempt to squeeze these compute-intensive workloads into their on-prem network sensor appliances, ExtraHop offloads them to a dedicated cloud compute farm with nearly unlimited compute and storage capacity. This architecture also enables ExtraHop to provide analytics and detections across customers' entire hybrid environments, from on-prem data centers to workloads running in the public cloud. Fortunately, once raw packets are converted into ML features, the volume of data drops dramatically, which makes the features practical to transport.
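To make the data reduction concrete, here is a minimal Python sketch of feature extraction. The `Packet` record, the choice of source IP as the entity, and the four features are hypothetical simplifications, not ExtraHop's actual feature set; a real sensor parses L7 protocols and extracts thousands of features per entity.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    """Hypothetical, heavily simplified packet record."""
    src_ip: str
    dst_ip: str
    dst_port: int
    length: int  # bytes on the wire


def extract_features(packets):
    """Collapse a stream of packets into one small feature dict per entity.

    The entity here is the source IP; the features are illustrative only.
    """
    bytes_sent = defaultdict(int)
    packet_count = defaultdict(int)
    peers = defaultdict(set)
    ports = defaultdict(set)
    for p in packets:
        bytes_sent[p.src_ip] += p.length
        packet_count[p.src_ip] += 1
        peers[p.src_ip].add(p.dst_ip)
        ports[p.src_ip].add(p.dst_port)
    return {
        ip: {
            "bytes_sent": bytes_sent[ip],
            "packet_count": packet_count[ip],
            "distinct_peers": len(peers[ip]),
            "distinct_dst_ports": len(ports[ip]),
        }
        for ip in packet_count
    }


# 10,000 packets from two hosts reduce to two four-field records.
packets = [
    Packet("10.0.0.5", f"10.0.1.{i % 20}", 443, 1500) for i in range(6000)
] + [
    Packet("10.0.0.7", "10.0.2.1", 22 if i % 2 else 80, 400) for i in range(4000)
]
features = extract_features(packets)
print(features["10.0.0.5"])
# {'bytes_sent': 9000000, 'packet_count': 6000, 'distinct_peers': 20, 'distinct_dst_ports': 1}
```

The packets in this example account for 10.6 MB on the wire, yet the output is two small dictionaries; in this split, only records like these, not the packets themselves, would need to be sent to the cloud.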

Conceptually, ExtraHop Cloud-Scale ML intelligently partitions every ML workload into two portions (data-intensive feature extraction and compute-intensive model training and execution) and optimally allocates them between on-prem network sensor appliances and cloud-hosted ML engines. By performing data-intensive computations closest to the data source and offloading compute-intensive computations to the cloud, the architecture lets ExtraHop customers benefit from the virtually limitless compute resources of the cloud without incurring significant network or scalability overhead.

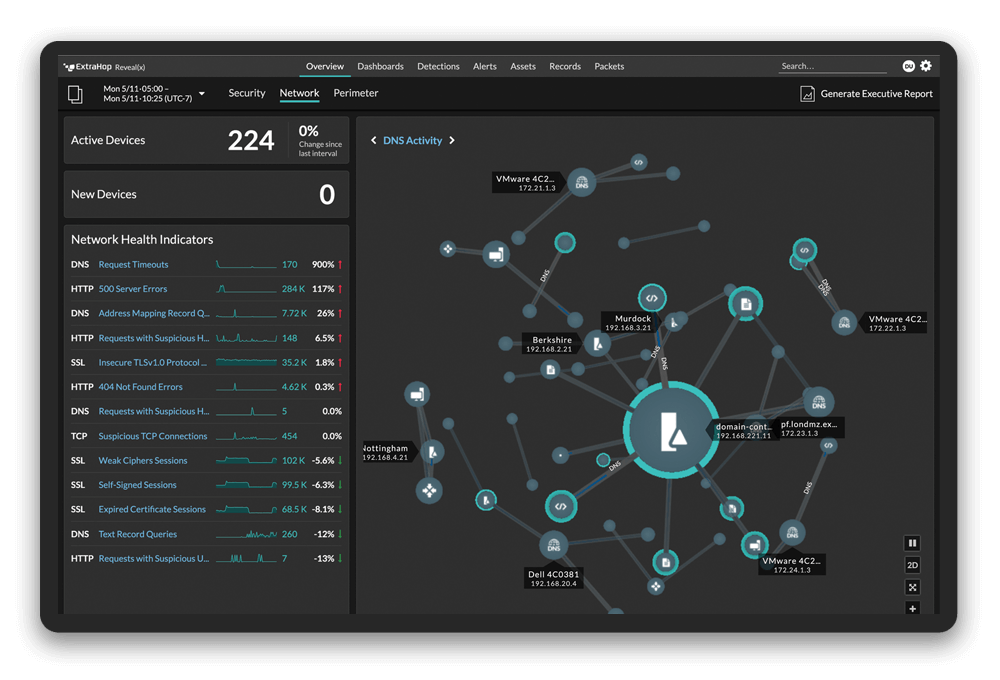

What Our Machine Learning Looks Like in Practice

ExtraHop Cloud-Scale ML consists of three key components: on-prem network sensor appliances, a secure cloud link, and cloud-hosted ML engines.

On-prem (physical, virtual, or hosted) network sensor appliances are in charge of performing real-time L7 stream analysis and fine-grained feature extraction for all of the ML capabilities. A typical network sensor appliance can extract four thousand features for every entity (such as an IP address or user) on the network while handling sustained traffic of up to 100 Gbps.

ExtraHop Cloud Services use a secure communication channel through which cloud-hosted ML engines request features from on-prem network sensor appliances in real time and send down the analytic results, including detections and insights. Customers' security and privacy have remained a top consideration for ExtraHop throughout the development of Cloud-Scale ML. As a result, multiple designs and mechanisms were put into place to ensure the confidentiality, integrity, and availability of customer data.

For example, ExtraHop uses extensive deidentification to ensure that no PII can be accessed by the cloud-hosted ML engines, without reducing the accuracy of detection and analysis. All client connections for indexing or querying data are encrypted with mutual certificate authentication, and no sensitive data leaves customer control.
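The post does not describe how ExtraHop's deidentification works. One common technique with the properties described (identifiers hidden from the cloud, per-entity analysis preserved) is keyed pseudonymization: an HMAC over each identifier, using a key that never leaves the customer site. The sketch below assumes that technique; `SITE_KEY`, `pseudonymize`, and `deidentify` are hypothetical names.

```python
import hashlib
import hmac
import secrets

# Hypothetical: the key is generated and kept on-prem and is never sent to the cloud.
SITE_KEY = secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes = SITE_KEY) -> str:
    """Map an identifier (user name, IP address) to a stable, non-reversible token."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(features: dict, key: bytes = SITE_KEY) -> dict:
    """Replace entity keys with tokens; the feature values pass through unchanged."""
    return {pseudonymize(entity, key): values for entity, values in features.items()}


raw = {"alice@corp.example": {"logins": 12}, "10.0.0.5": {"bytes_sent": 9_000_000}}
safe = deidentify(raw)  # keys are now 64-character hex tokens
```

Because the same identifier always maps to the same token, models in the cloud still see one consistent entity. The secret key matters: the IPv4 address space is small enough that unkeyed SHA-256 tokens could be reversed simply by hashing every possible address.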

Cloud-hosted ML engines fetch features from on-prem network sensor appliances via the secure cloud link and perform a wide variety of ML analytics, from real-time threat detection to network performance analytics. They typically consist of hundreds of smaller analytical modules drawing on a pool of purpose-built ML algorithms.

Benefits of Cloud-Based Machine Learning

Superior Detections and Analytics: Because compute-intensive ML workloads run in the cloud, ExtraHop can dedicate 10x more compute resources to analyzing the rich network data produced by on-prem network sensor appliances. This enables high-fidelity modeling of every aspect of a customer's environment and the use of sophisticated ML algorithms that simply cannot fit within a 1RU appliance.

For example, on average, Reveal(x) ML's threat detection engine builds hundreds of predictive models for every entity (such as an IP address or user) observed on the network, covering all aspects of its behavior and interactions. For a network with 10,000 devices and users, that means more than 1 million personalized ML models. In addition, dozens of multi-million-node graphs representing behavior patterns and correlations across customers' entire networks are computed and analyzed continuously to detect subtle suspicious behaviors and to generate network-specific knowledge that enables more accurate analysis.
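The arithmetic is straightforward: at 100 or more models per entity, 10,000 entities means at least 1,000,000 models. The sketch below shows what one such small per-entity model might look like, assuming a simple exponentially weighted mean and variance per (entity, feature) pair, scored with a z-score threshold. This is a hypothetical stand-in; the post does not describe ExtraHop's actual algorithms, and the class names, `alpha`, and `threshold` values are illustrative.

```python
import math
from dataclasses import dataclass


@dataclass
class EwmaBaseline:
    """Online model of one feature for one entity: exponentially weighted
    mean and variance, used to score how unusual a new value is."""
    alpha: float = 0.1
    mean: float = 0.0
    var: float = 0.0
    n: int = 0

    def score(self, x: float) -> float:
        """Return |z-score| of x against the baseline (0.0 while warming up)."""
        if self.n < 2 or self.var == 0.0:
            return 0.0
        return abs(x - self.mean) / math.sqrt(self.var)

    def update(self, x: float) -> None:
        """Incremental exponentially weighted mean/variance update."""
        if self.n == 0:
            self.mean = x
        else:
            diff = x - self.mean
            incr = self.alpha * diff
            self.mean += incr
            self.var = (1 - self.alpha) * (self.var + diff * incr)
        self.n += 1


class EntityModels:
    """One baseline per (entity, feature) pair, created lazily."""

    def __init__(self, threshold: float = 4.0):
        self.threshold = threshold
        self.models: dict[tuple[str, str], EwmaBaseline] = {}

    def observe(self, entity: str, features: dict[str, float]) -> list[str]:
        """Score each feature against its baseline, then learn from it.

        Returns the names of features whose values look anomalous.
        """
        anomalies = []
        for name, value in features.items():
            model = self.models.setdefault((entity, name), EwmaBaseline())
            if model.score(value) > self.threshold:
                anomalies.append(name)
            model.update(value)
        return anomalies


models = EntityModels()
for i in range(50):
    models.observe("host-a", {"bytes_sent": 1000 + (i % 5) * 10, "distinct_peers": 3 + i % 2})
result = models.observe("host-a", {"bytes_sent": 50_000, "distinct_peers": 3})
print(result)  # ['bytes_sent']
```

Because models are created on first sight of each (entity, feature) pair, the model count grows as entities × features, which is how per-entity modeling reaches millions of models on a large network.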

Industry-Leading Scalability: ExtraHop Cloud-Scale ML is one of the key pillars of ExtraHop's 5x scalability advantage over our competitors. By offloading compute-intensive ML workloads to the cloud, precious compute resources on the on-prem network sensor appliances are freed up and repurposed to process sustained traffic of up to 100 Gbps while collecting thousands of features and metrics.

Global Coverage Across Your Network Boundaries: Unlike solutions that run ML locally and independently on each network sensor appliance, ExtraHop Cloud-Scale ML provides global coverage of customers' environments across all network sensor appliances, on-prem and in the cloud, within a single instance of the ML engine.

This allows ExtraHop to provide more accurate analytics and detections based on the behavior of a customer's entire hybrid environment, from campus networks to private data centers to public cloud workloads, rather than on individual network regions.
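A toy example of why a single engine with a global view can catch what independent per-sensor engines miss. It assumes a hypothetical fan-out rule (flag an entity that contacts more than five distinct peers) and assumes each entity is identified consistently across sensors; the sensor names and data are made up for illustration.

```python
from collections import defaultdict

# Hypothetical per-sensor observations: which peers each entity contacted.
sensor_views = {
    "datacenter-sensor": {"host-a": {"db-1", "db-2", "db-3"}},
    "cloud-sensor": {"host-a": {"vm-7", "vm-8", "vm-9"}},
}

FANOUT_THRESHOLD = 5  # hypothetical rule: flag entities reaching more than 5 peers


def local_alerts(views, threshold=FANOUT_THRESHOLD):
    """Each sensor decides alone, using only the peers it can see."""
    return {
        (sensor, entity)
        for sensor, entities in views.items()
        for entity, peers in entities.items()
        if len(peers) > threshold
    }


def global_alerts(views, threshold=FANOUT_THRESHOLD):
    """One engine merges every sensor's view of each entity before deciding."""
    merged = defaultdict(set)
    for entities in views.values():
        for entity, peers in entities.items():
            merged[entity] |= peers
    return {entity for entity, peers in merged.items() if len(peers) > threshold}


print(local_alerts(sensor_views))   # set(): each sensor sees only 3 peers
print(global_alerts(sensor_views))  # {'host-a'}: 6 peers across both sensors
```

Neither sensor alone sees enough of `host-a`'s behavior to cross the threshold; only the merged view does.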

Rapid Security Updates: Today's security threats are evolving at such a breakneck pace that the daily firmware updates and weekly model updates offered by traditional security vendors are no longer sufficient to protect businesses from the latest threats.

While our Threat Research and Data Science teams constantly refine our threat detection modules based on the latest threats and model experiments, ExtraHop Cloud-Scale ML uses cloud-pushed live updates and continuous deployment of the cloud-based ML engines to ensure that customers always get the latest and greatest iteration of our ML analytics without any manual intervention.

So, there you have it—a top-down look at how ExtraHop delivers industry-leading, cloud-based machine learning for hybrid security and performance.

This is the first in an ongoing series of blog posts detailing how our architecture works and why our ML is such a critical factor in our market-defining approach to network detection and response (NDR). Watch this space for the next installment!