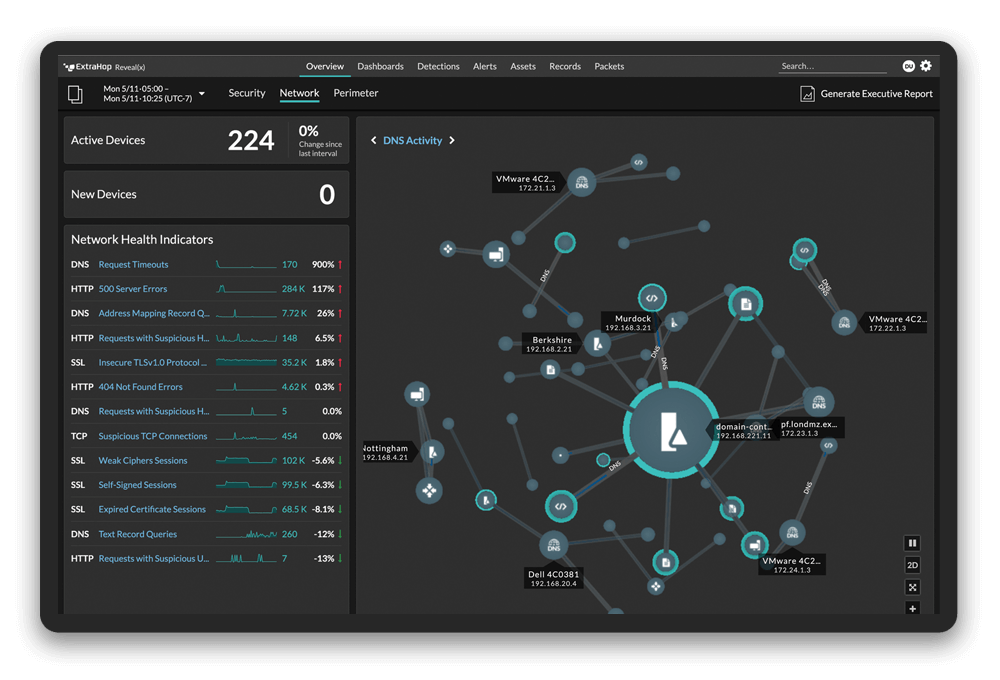

In the previous blog post, we went through the core machine learning (ML) components of our network detection and response product ExtraHop Reveal(x), ranging from inference to behavioral graph analytics to autonomous investigation. In this post, we discuss the two main categories of ML algorithms, supervised and unsupervised, and how we use them in different parts of the product, with the ultimate goal of providing you with accurate detections.

Machine learning is the key that unlocks greater SOC efficiency by automating various manual tasks, ranging from attack detection to investigation. However, with current state-of-the-art technology it is technically infeasible to build a single ML algorithm that can detect every attack technique, gather all the relevant context, and autonomously carry out an intelligent response on its own. As a result, our approach to applying ML is to "divide and conquer."

Specifically, we break the manual tasks in the detection, investigation, and response workflow into smaller functional components and focus on automating each component individually with ML. This is why we currently have hundreds of detectors and a dozen different ML components working together in Reveal(x).

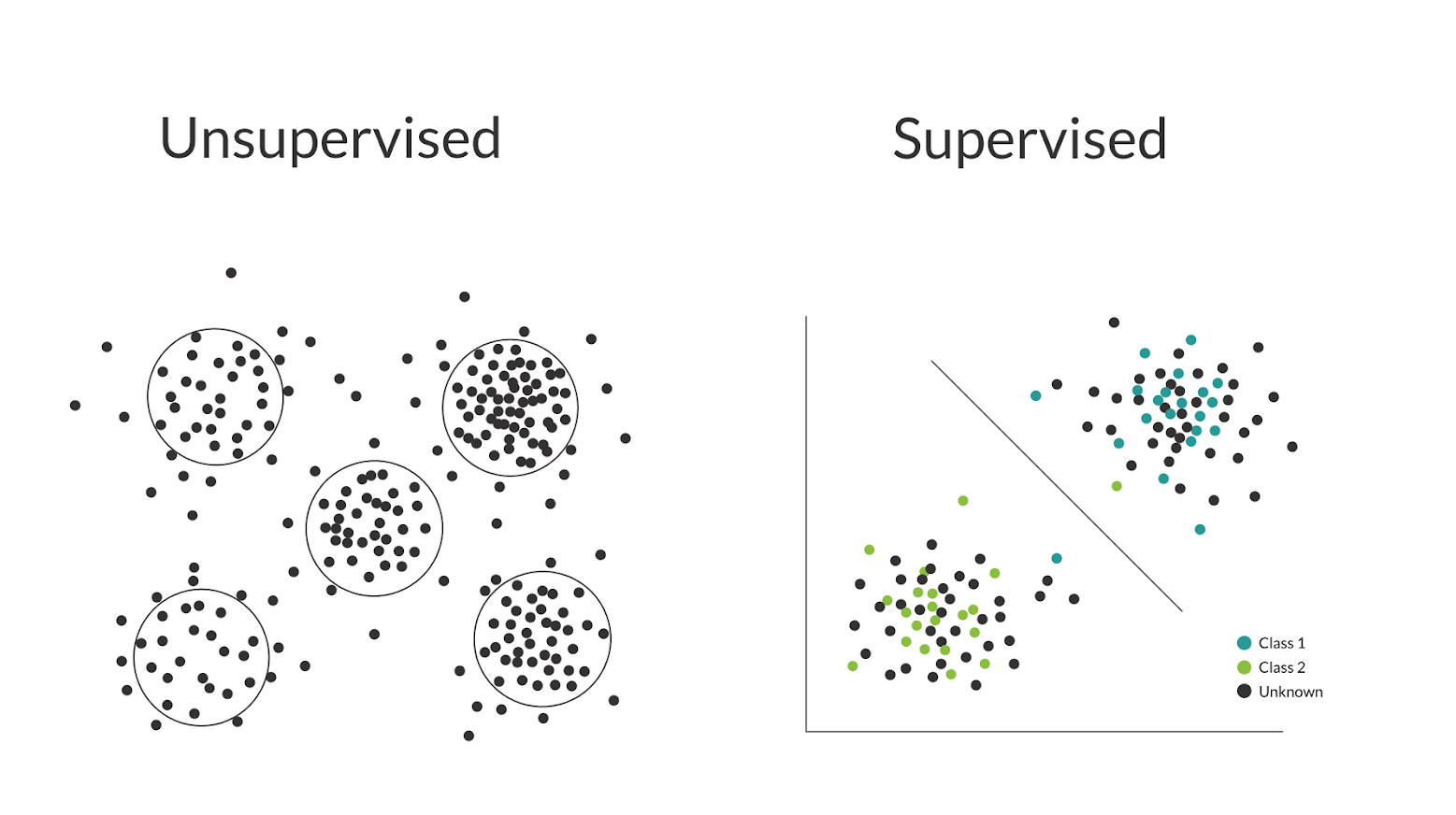

Before we dive into how we choose between supervised and unsupervised algorithms for each ML component and detector, let's first look at the pros and cons of each. The primary difference between the two is whether they require labeled data. A labeled dataset is a collection of observations (samples), each paired with its corresponding label. For example, the label for an image containing a cat would be "cat," and the label for a domain produced by a domain generation algorithm (DGA) would be "malicious."

Supervised vs. Unsupervised Machine Learning

Supervised learning covers ML algorithms that must be trained on labeled datasets. Image-based object recognition and classification typically use supervised learning, where the algorithms learn from millions of images and their corresponding labels. Supervised learning is very powerful at well-defined tasks but can only be used when a sufficiently comprehensive labeled dataset exists.

For example, to train a very accurate "hot dog or not hot dog" image classifier, one needs to collect images not only of hot dogs but ideally of every other type of object that might be confused for a hot dog. Collecting a comprehensive dataset that covers every foreseeable possibility is extremely challenging in cybersecurity because behavior varies so widely across networks and across the organizations, applications, and users that rely on them.
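To make the comprehensiveness problem concrete, here is a minimal sketch. It swaps in a simple nearest-centroid classifier (not how production image classifiers are built) and uses two hypothetical features, elongation and redness. Because the "not hot dog" training samples only cover pizzas and salads, the model has never seen a carrot and confidently calls it a hot dog.

```python
import math

# Hypothetical 2-D features for illustration: (elongation, redness), both in [0, 1].
TRAINING_DATA = [
    ((0.90, 0.70), "hot dog"),
    ((0.85, 0.75), "hot dog"),
    ((0.95, 0.65), "hot dog"),
    # The "not hot dog" class only covers pizzas and salads.
    ((0.10, 0.80), "not hot dog"),
    ((0.15, 0.85), "not hot dog"),
    ((0.10, 0.20), "not hot dog"),
    ((0.20, 0.15), "not hot dog"),
]


def train_centroids(samples):
    """Supervised training: average the feature vectors that share a label."""
    sums, counts = {}, {}
    for (x, y), label in samples:
        sx, sy = sums.get(label, (0.0, 0.0))
        sums[label] = (sx + x, sy + y)
        counts[label] = counts.get(label, 0) + 1
    return {label: (sx / counts[label], sy / counts[label]) for label, (sx, sy) in sums.items()}


def predict(centroids, point):
    """Return the label of the nearest centroid; every input gets some known label."""
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))


centroids = train_centroids(TRAINING_DATA)
print(predict(centroids, (0.92, 0.72)))  # a hot dog -> hot dog
print(predict(centroids, (0.90, 0.60)))  # a carrot, never seen in training -> hot dog
```

A closed-set classifier like this always answers with one of the labels it was trained on, so gaps in the training data show up as confident mistakes rather than as uncertainty.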

Unsupervised learning generally refers to algorithms that can "learn" from an entirely unlabeled dataset. It might seem strange that an algorithm can learn anything without labels or ground truth. However, the data itself still carries plenty of information: its distribution, its patterns, and even parts of the data that can serve as labels for other parts.

Unsupervised learning is widely used, for example in clustering for market segmentation and in time-series analysis for stock market or supply chain demand prediction. Unsupervised learning algorithms usually work by making practical assumptions about how the data is distributed. For example, an algorithm might assume that if X is very common in the dataset, X is more likely to be part of normal behavior. Unsupervised learning is very good at identifying patterns in data, which in turn can be used to detect abnormalities or changes in behavior without relying on any labels.
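A minimal sketch of the "common means normal" assumption, using a device's observed protocols as the only input (the data and the 5% cutoff are hypothetical): count how often each value occurs and flag the rare ones, with no labels involved.

```python
from collections import Counter


def rare_values(observations, min_share=0.05):
    """Return values that make up less than min_share of all observations.

    Relies only on the assumption that what is common is normal; no labels are used.
    """
    counts = Counter(observations)
    total = len(observations)
    return sorted(value for value, count in counts.items() if count / total < min_share)


# Hypothetical protocols observed for one device over a day.
observed = ["HTTPS"] * 60 + ["DNS"] * 30 + ["NTP"] * 8 + ["TELNET"] * 2
print(rare_values(observed))  # -> ['TELNET']
```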

For this reason, unsupervised learning algorithms are popular among security vendors. They are also very adaptive, adjusting to each customer's specific environment and security policies.

Unfortunately, many vendors apply unsupervised learning directly to raw data (such as event logs), which often leads to excessive false positives and noisy alerts. The reason is that while unsupervised learning algorithms are very good at identifying mathematical abnormalities, not all mathematical abnormalities correspond to meaningful detections and alerts. For example, if every Mac in the network suddenly starts downloading large files from the Internet, that would be a mathematical anomaly, but one caused by the release of a new version of macOS rather than a security concern.
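The sketch below illustrates the problem with a naive detector applied to raw per-host volumes. The host names, volumes, and the z-score threshold of 3 are hypothetical. Each host is compared only against its own history, so when every Mac downloads the same OS update, every Mac is flagged.

```python
import statistics

# Hypothetical daily download volumes in MB for each host over the past five days.
HISTORY = {
    "mac-01": [180, 220, 200, 210, 190],
    "mac-02": [190, 210, 200, 220, 180],
    "mac-03": [200, 180, 220, 190, 210],
    "win-01": [300, 320, 310, 290, 280],
}
# Today every Mac pulls a multi-gigabyte OS update.
TODAY = {"mac-01": 12000, "mac-02": 11800, "mac-03": 12100, "win-01": 305}


def zscore_alerts(history, today, threshold=3.0):
    """Flag hosts whose volume today is far above their own historical mean."""
    alerts = []
    for host, past in history.items():
        mean = statistics.mean(past)
        stdev = max(statistics.stdev(past), 1e-9)  # avoid dividing by zero
        if (today[host] - mean) / stdev > threshold:
            alerts.append(host)
    return sorted(alerts)


print(zscore_alerts(HISTORY, TODAY))  # -> ['mac-01', 'mac-02', 'mac-03']
```

Mathematically, each alert is correct; operationally, all three are noise.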

A Unique Approach to Improve Accuracy of Unsupervised Learning

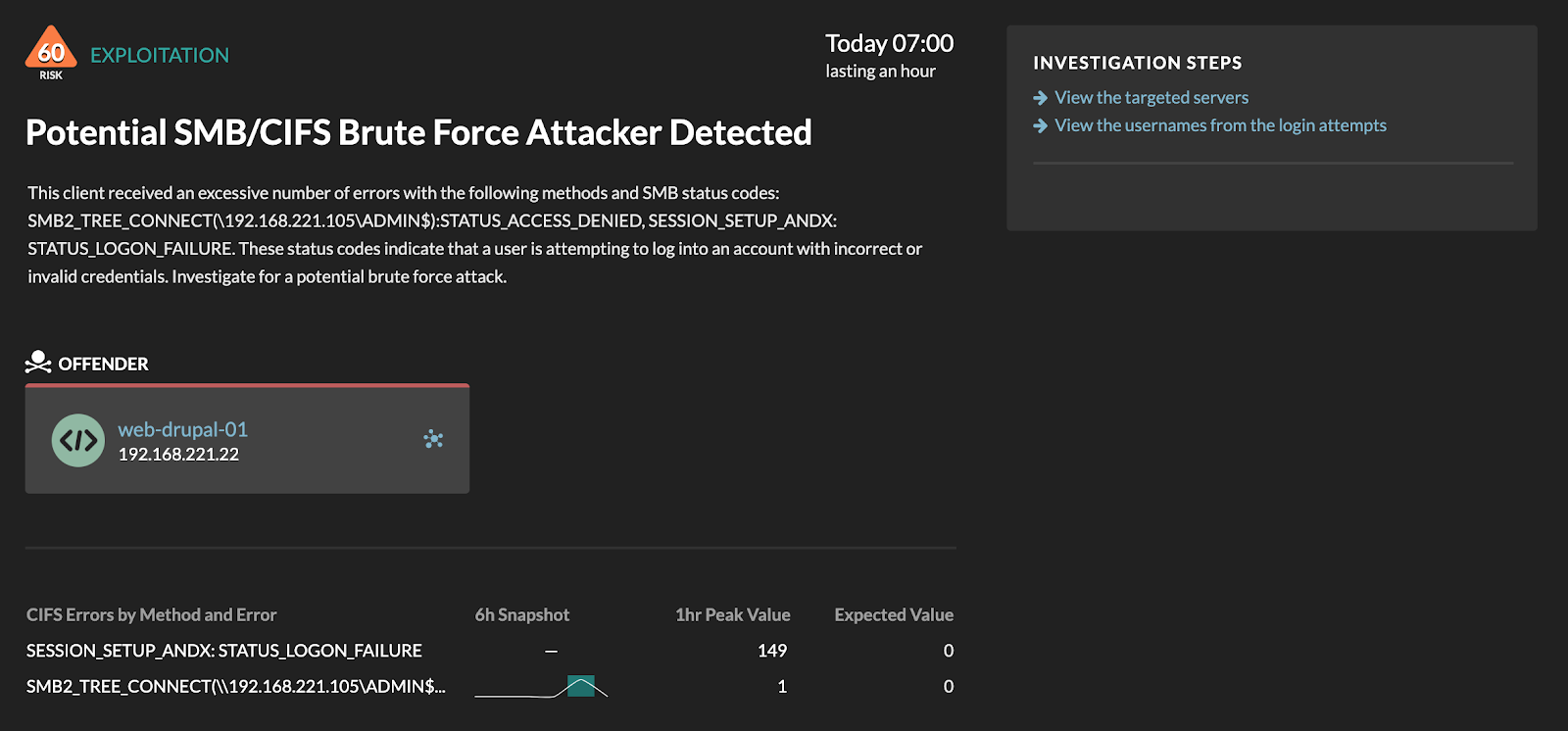

At ExtraHop, we combine the analytical power of unsupervised ML with security domain knowledge about how attack techniques manifest on the network, resulting in unsupervised ML-powered threat detection that produces significantly fewer false positives. Specifically, we guide the unsupervised learning algorithms with extensive feature engineering (leveraging industry-leading application-layer visibility), parameter tuning, and post-processing based on our domain expertise in networking and security.
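As one illustration of domain-guided post-processing, here is a hypothetical rule, not ExtraHop's actual logic: if at least half of a host's peer group is flagged at the same time, treat the change as population-wide (like an OS rollout) and suppress it. The peer-group mapping is assumed to be given, and the 0.5 cutoff is an illustrative choice.

```python
from collections import defaultdict


def suppress_group_wide(flagged, peer_group, max_share=0.5):
    """Drop alerts when at least max_share of a host's peer group is flagged at once.

    A change shared by most of a peer group (e.g., an OS update) is treated as a
    population-wide shift rather than an attack. Hosts without a known group are kept.
    """
    members = defaultdict(set)
    for host, group in peer_group.items():
        members[group].add(host)
    flagged_set = set(flagged)
    kept = []
    for host in flagged_set:
        group = peer_group.get(host)
        if group is None:
            kept.append(host)
            continue
        share = len(members[group] & flagged_set) / len(members[group])
        if share < max_share:
            kept.append(host)
    return sorted(kept)


# Hypothetical peer groups and detector output.
PEER_GROUP = {
    "mac-01": "macs", "mac-02": "macs", "mac-03": "macs", "mac-04": "macs",
    "db-01": "db-servers", "db-02": "db-servers", "db-03": "db-servers", "db-04": "db-servers",
}
flagged = ["mac-01", "mac-02", "mac-03", "mac-04", "db-03"]
print(suppress_group_wide(flagged, PEER_GROUP))  # -> ['db-03']
```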

This approach ensures that these detectors detect real attacks, not just mathematical anomalies. As part of our detection R&D process, every detector is tested extensively against a range of simulated and real attacks, as well as benign traffic, to validate its accuracy.

Reveal(x) has access to highly relevant application-layer features such as SMB/CIFS methods and errors.
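A sketch of the kind of feature engineering this enables, assuming hypothetical parsed SMB2 records that carry a client address, a command name, and an NTSTATUS result. The record type and feature names are assumptions; SMB2_CREATE, STATUS_ACCESS_DENIED, and the other command and status names are real protocol identifiers. Features such as error rate and access-denied counts give an unsupervised model security-relevant inputs instead of raw byte counts.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SmbRecord:
    """Hypothetical parsed SMB2 transaction: sender, command, and status."""
    client: str
    command: str
    status: str


def smb_features(records):
    """Aggregate per-client features from SMB2 commands and their NTSTATUS results."""
    stats = defaultdict(lambda: {"requests": 0, "errors": 0, "denied": 0, "commands": set()})
    for rec in records:
        s = stats[rec.client]
        s["requests"] += 1
        s["commands"].add(rec.command)
        if rec.status != "STATUS_SUCCESS":
            s["errors"] += 1
        if rec.status == "STATUS_ACCESS_DENIED":
            s["denied"] += 1
    return {
        client: {
            "requests": s["requests"],
            "error_rate": s["errors"] / s["requests"],
            "access_denied": s["denied"],
            "distinct_commands": len(s["commands"]),
        }
        for client, s in stats.items()
    }


records = [
    SmbRecord("10.0.0.5", "SMB2_CREATE", "STATUS_SUCCESS"),
    SmbRecord("10.0.0.5", "SMB2_READ", "STATUS_SUCCESS"),
    SmbRecord("10.0.0.5", "SMB2_READ", "STATUS_SUCCESS"),
] + [SmbRecord("10.0.0.9", "SMB2_CREATE", "STATUS_ACCESS_DENIED")] * 4 + [
    SmbRecord("10.0.0.9", "SMB2_CREATE", "STATUS_SUCCESS"),
]
features = smb_features(records)
print(features["10.0.0.9"])
# -> {'requests': 5, 'error_rate': 0.8, 'access_denied': 4, 'distinct_commands': 1}
```

A client sweeping shares it has no rights to stands out through its error rate, a signal that raw traffic volume would not reveal.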

Factors for Deciding Between the Two

When our data scientists research and develop the algorithms for a specific component or detector, they look closely at two things: the task that needs to be automated and the labeled dataset available for it. The type of task matters because tasks with universally accepted definitions are better suited for supervised learning: the model only needs to approximate one well-defined task instead of hundreds of variants shaped by the preferences and circumstances of individual customers.

In addition, the size and comprehensiveness of the labeled dataset are critical to the accuracy of the ML component or detector. A dataset that is too small or not comprehensive enough can cause a supervised model to behave unpredictably in scenarios absent from its training data, leading to false positives and false negatives.

For example, supervised learning is well suited for DGA domain and beaconing detection, because the definition of DGA is universal and we have access to a large, comprehensive dataset containing millions of benign and malicious domain names. At ExtraHop, we leverage a variety of supervised learning algorithms, from shallow classical algorithms to sophisticated deep neural networks, to accurately detect these well-known and universally understood suspicious behaviors.
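To show what a shallow classical approach to DGA detection can look like, here is a minimal sketch using multinomial naive Bayes over character bigrams with add-one smoothing. This is a swapped-in textbook technique, not necessarily one Reveal(x) uses, and the ten benign and five DGA-style labels are made-up toy data, far smaller than the millions of domains a real model needs.

```python
import math
from collections import Counter

# Toy labeled data for illustration only (second-level labels, no TLD).
BENIGN = ["google", "facebook", "amazon", "wikipedia", "youtube",
          "netflix", "microsoft", "github", "twitter", "linkedin"]
DGA = ["xjq7kz2vbw", "qzx9pfk3tl", "vbq8zxk1wm", "kzqx4jvb7p", "wxz2qjk9fv"]


def bigrams(label):
    """Character bigrams, with ^ and $ marking the start and end of the label."""
    padded = f"^{label}$"
    return [padded[i:i + 2] for i in range(len(padded) - 1)]


class BigramNaiveBayes:
    """Multinomial naive Bayes over character bigrams with add-one smoothing."""

    def fit(self, samples):
        # samples: list of (domain_label, class_name) pairs
        self.counts, self.totals, self.priors = {}, {}, {}
        class_sizes = Counter(cls for _, cls in samples)
        vocab = set()
        for domain, cls in samples:
            grams = bigrams(domain)
            self.counts.setdefault(cls, Counter()).update(grams)
            vocab.update(grams)
        for cls, counter in self.counts.items():
            self.totals[cls] = sum(counter.values())
            self.priors[cls] = math.log(class_sizes[cls] / len(samples))
        # +1 reserves probability mass for bigrams never seen in training.
        self.vocab_size = len(vocab) + 1
        return self

    def log_score(self, domain, cls):
        counter, total = self.counts[cls], self.totals[cls]
        return self.priors[cls] + sum(
            math.log((counter[g] + 1) / (total + self.vocab_size)) for g in bigrams(domain)
        )

    def predict(self, domain):
        return max(self.counts, key=lambda cls: self.log_score(domain, cls))


training = [(d, "benign") for d in BENIGN] + [(d, "malicious") for d in DGA]
model = BigramNaiveBayes().fit(training)
print(model.predict("bookface"))  # -> benign
print(model.predict("qzxjkv"))    # -> malicious
```

Random-looking labels are full of bigrams such as `qz` and `zx` that rarely occur in human-chosen names, and that regularity is what the classifier learns from the labels.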

In comparison, unsupervised learning is a better fit for behavioral detections, such as abnormal remote access or data movement, because what counts as abnormal varies greatly across network environments and across the entities on each network. For example, a 200 MB data transfer via SSH between two internal IPs might be benign for subnet A but very suspicious for subnet B, depending on the properties and roles of the IPs involved. Unsupervised learning can be used to infer the organic architecture of application tiers, organizations, and device groups on the network, and to surface localized anomalies where a device behaves suspiciously compared to its peers.
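A minimal sketch of peer-relative scoring for the SSH example, assuming the peer groups are already known (here they are given by hand, whereas the text describes inferring them with unsupervised learning). It swaps in a robust z-score based on the median absolute deviation (MAD); the transfer sizes and the 3.5 cutoff are hypothetical. The same 200 MB transfer scores as normal against subnet A and as an extreme outlier against subnet B.

```python
import statistics

# Hypothetical SSH transfer sizes (MB) previously seen from hosts in each subnet.
SUBNET_A = [180, 210, 195, 220, 205, 190]  # e.g., backup servers
SUBNET_B = [2, 4, 3, 5, 1, 3]              # e.g., user workstations


def robust_zscore(value, peer_values):
    """Distance from the peer median, in units of scaled median absolute deviation."""
    median = statistics.median(peer_values)
    mad = statistics.median([abs(v - median) for v in peer_values])
    # 1.4826 scales MAD to match the standard deviation for normally distributed data.
    scale = max(1.4826 * mad, 1e-9)
    return (value - median) / scale


def is_anomalous(transfer_mb, peer_values, threshold=3.5):
    """Flag a transfer that sits far above what its own peer group usually does."""
    return robust_zscore(transfer_mb, peer_values) > threshold


print(is_anomalous(200, SUBNET_A))  # -> False
print(is_anomalous(200, SUBNET_B))  # -> True
```

Median and MAD are used instead of mean and standard deviation so that a few past outliers in a peer group do not inflate its baseline.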

In this blog post, we covered the pros and cons of supervised and unsupervised learning for threat detection. Reveal(x) utilizes both categories across hundreds of detectors and a dozen ML components as part of our cloud-scale ML service. If you would like to see how these detections are presented in our user interface to speed SOC workflows, check out our fully interactive demo.