Change isn't coming; it's already underway for many government agencies and public sector organizations. Driving that change are the ever-present threat of ransomware and the cybersecurity initiatives that have emerged in response. The result is a long list of organizations taking stock of their incident response capabilities as they work to implement fresh zero trust mandates.

Cybersecurity modernization efforts ramped up in May 2021 when the Biden Administration issued Executive Order 14028, Improving the Nation's Cybersecurity, which, among other things, mandates the adoption of zero trust policies and establishes rules and systems for reporting cyber incidents and sharing relevant data. To provide further guidance, the White House also addressed a memorandum (M-21-31) to the heads of executive departments and agencies, which outlines requirements and benchmarks for the executive order's data sharing mandates.

It All Goes Back to Zero Trust

Executive Order 14028 couples the adoption of zero trust frameworks with more advanced investigation, incident response, and information sharing requirements to help shut down emboldened cyber gangs and reduce overall risk. For many organizations, assessing their data retention capabilities and renewing efforts to close visibility gaps are crucial steps toward the threat detection and data collection initiatives associated with zero trust architectures. The newly outlined data retention requirements are a logical starting point for achieving these goals.

The new government mandates mark a major shift away from prevention-based security postures, toward a more proactive, detection-based stance. Their benchmarks can and should be met in tandem as part of a broader effort to implement a modern security strategy capable of detecting today's advanced threats.

New Data Retention Requirements

In response to M-21-31, government agencies are assessing their data retention maturity to meet the first benchmark on October 26. After that, they will work toward meeting the basic retention requirements for high-value assets by August 27, 2022, with additional maturity benchmarks mapped out for February and August 2023. Web log data is central to the memorandum's retention requirements, but agencies are also being directed to provide packet capture information and network data.

The data collection standards outlined in M-21-31 are extensive, and government agencies will need to react swiftly and deliberately to implement them. Logging requirements include:

- HTTP URLs and corresponding status codes

- DNS queries and responses

- SSL connection types (ciphers, versions)

- DHCP requests (lease information)

- End user response time(s)

- File access (opens/reads/writes)
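
One way to take stock against a list like this is to compare what is already being collected with what is required. The sketch below is illustrative only: the category labels are shorthand invented here for the six items above, not identifiers defined in M-21-31, and the sample inventory is hypothetical.

```python
# Shorthand labels for the six logging items listed above.
# These names are illustrative assumptions, not terms defined in M-21-31.
REQUIRED_CATEGORIES = {
    "http_url_status",
    "dns_query_response",
    "ssl_connection",
    "dhcp_lease",
    "end_user_response_time",
    "file_access",
}


def coverage_gaps(collected):
    """Return the required categories missing from `collected`, sorted by name."""
    return sorted(REQUIRED_CATEGORIES - set(collected))


if __name__ == "__main__":
    # Hypothetical inventory for an agency that logs only web and DNS activity.
    collected = {"http_url_status", "dns_query_response"}
    for category in coverage_gaps(collected):
        print("missing:", category)
```

Keeping the required list in one place makes it straightforward to rerun the same check as later benchmarks add new data sources.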

Adding to log data requirements, packet capture data for network infrastructures and cloud environments is deemed highly critical, with website application and network traffic data required in later benchmarks. Packet capture data is required to be held for seventy-two hours.
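
A retention window like this can be expressed as a cutoff time: captures that ended before the cutoff may be aged out, and everything newer must still be on hand. The following is a minimal sketch, assuming a hypothetical inventory that maps capture file names to the time each capture ended. The file names and the `expired_captures` helper are invented for illustration.

```python
from datetime import datetime, timedelta, timezone

# Seventy-two hours, the packet capture retention period cited above.
PCAP_RETENTION = timedelta(hours=72)


def expired_captures(captures, now):
    """Return names of captures that ended before the retention cutoff.

    `captures` maps a capture name to the timezone-aware time it ended.
    """
    cutoff = now - PCAP_RETENTION
    return sorted(name for name, ended in captures.items() if ended < cutoff)


if __name__ == "__main__":
    now = datetime(2022, 1, 10, 12, 0, tzinfo=timezone.utc)
    # Hypothetical capture files and end times.
    captures = {
        "core-switch-a.pcap": now - timedelta(hours=80),
        "core-switch-b.pcap": now - timedelta(hours=71),
    }
    print(expired_captures(captures, now))
```

A capture that ended exactly 72 hours ago is treated as still inside the window here. Whether the boundary should be inclusive is a policy decision this sketch does not settle.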

To meet the growing network visibility requirements, agencies will need the ability to inspect encrypted data. M-21-31 suggests the use of proxy servers to help identify malicious activity in, and log data from, incoming and outgoing traffic. Proxies are meant to decrypt and analyze that traffic, which can help identify intrusions or exfiltrations, that is, the start and end points of an attack.

The memo directly links the inspection of encrypted data to zero trust architecture by adding that "agencies are expected to follow zero trust principles concerning least privilege and reduced attack surface, and relevant guidance from OMB and CISA relating to zero trust architecture."

There's a Better Way

Assessing Data Collection and Analysis Gaps

Log data has long been seen as the gold standard for data collection and retention. As M-21-31 says, "Information from logs on Federal information systems (for both on-premises systems and connections hosted by third parties, such as cloud services providers (CSPs)) is invaluable in the detection, investigation, and remediation of cyber threats."

Unfortunately, logs have a few drawbacks that are hard to ignore. At their best, logs are extremely comprehensive, which can turn the aftermath of a stealthy breach into a search for a needle in a haystack. In some instances, the volume of data produced by logs is downright cumbersome to store and sift through (as with DNS traffic) or nearly impossible to scale (in the case of cloud workloads). At their worst, logs state the obvious: a log disabled or erased by an attacker tells you what you already knew (you've been breached) and nothing more.

Additionally, collecting logs in the traditional manner is difficult (often painful) to implement in practice. Typically, log collection involves installing additional agent software, which can add extensive overhead to server and network infrastructures. How many organizations can claim to have traditional logging deployed across 100% of their environments? Speaking from experience, the vast majority of enterprises have visibility gaps in their SIEM/logging implementation.

Proxy servers are common tools for inspecting encrypted traffic, but they leave critical visibility gaps by missing laterally moving network traffic. Zero trust concepts hinge upon an organization's ability to view and analyze encrypted network traffic to ensure that compromised credentials don't have free rein to escalate privileges. This calls for organizations to move beyond proxies and firewalls to look deeper into the network.
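
The gap comes down to where a flow travels: traffic between two internal hosts never crosses a perimeter proxy, so the proxy has nothing to inspect. As a rough sketch, flows can be labeled by checking whether both endpoints sit in private address space. Treating private addresses as "internal" is a simplifying assumption, and the sample addresses and the `is_lateral` helper are hypothetical.

```python
import ipaddress


def is_lateral(src, dst):
    """Return True when both endpoints are private addresses.

    `is_private` is True for RFC 1918 ranges and other special-purpose
    ranges such as loopback, so this is only an approximation of "internal".
    """
    return (
        ipaddress.ip_address(src).is_private
        and ipaddress.ip_address(dst).is_private
    )


if __name__ == "__main__":
    # Hypothetical flows: (source address, destination address).
    flows = [
        ("10.0.4.17", "10.0.9.30"),  # workstation to internal file server
        ("10.0.4.17", "8.8.8.8"),    # workstation to an external resolver
    ]
    for src, dst in flows:
        kind = "lateral" if is_lateral(src, dst) else "perimeter"
        print(src, "->", dst, kind)
```

Only the second flow would pass through a proxy at the network edge. The first stays inside the network, which is where credential abuse and privilege escalation tend to play out.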

Contextual Data with Network Detection and Response

As organizations are wrapping up their initial assessment and implementing new data retention policies, it's worth considering how a good portion of the data outlined in M-21-31 can be easily extracted using a network detection and response (NDR) solution.

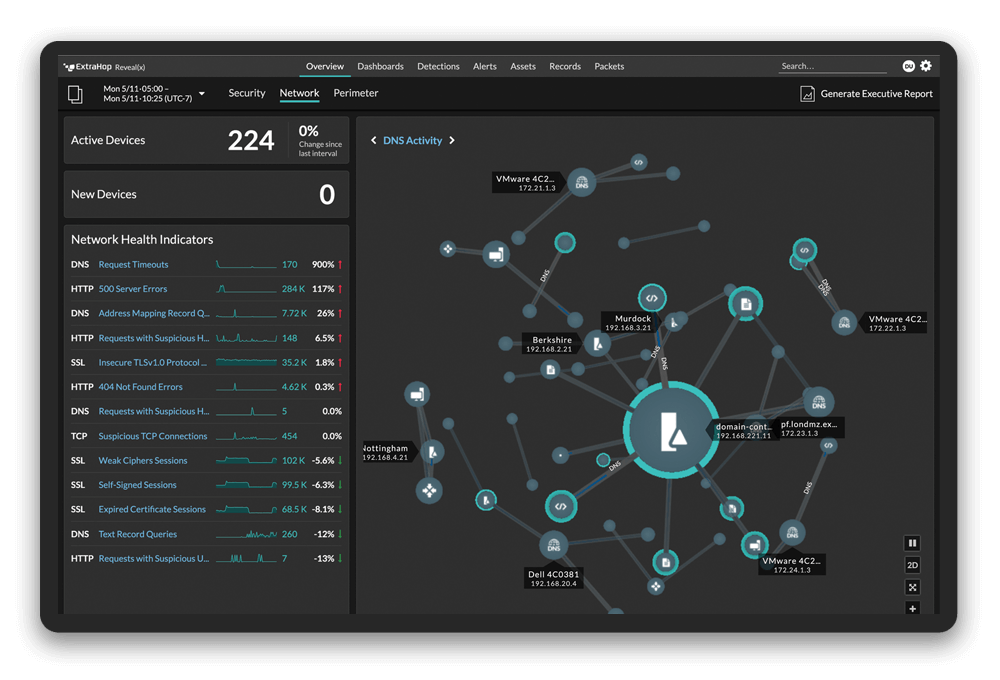

NDR offers behavior-based analytics with out-of-band decryption capabilities that can analyze all network traffic, right down to the packet level. ExtraHop Reveal(x) NDR includes lookback capabilities and out-of-band decryption, which offer another option for capturing the necessary metadata in a way that scales to meet visibility and storage needs. Reveal(x) can easily monitor and store data for difficult-to-log sources such as DNS traffic and cloud environments. It can also do what proxies can't: decrypt, inspect, and store laterally moving network traffic.

Reveal(x) can also help agencies simplify their data collection, storage, and management processes by offering one tool that can seamlessly pull information from across the IT infrastructure, including physical, virtual, and cloud environments. A more unified security solution that supports zero trust and data collection mandates allows agencies to streamline toolsets and create more efficient, effective workflows.

Accelerate the Pace of Change

M-21-31 is just part of a larger cybersecurity modernization campaign aimed at preventing infrastructure-threatening ransomware attacks across public and private sectors. Led by the White House, the Department of Homeland Security (DHS), and CISA, the campaign holds government organizations to high standards, both for their own sake and to serve as a model for private sector organizations that can also benefit from zero trust frameworks.

New mandates for government agencies are just the start of a steady cybersecurity evolution that will likely affect every sector of our government and economy. Change isn't expected to be swift, but incremental and deliberate. As CISA states in its zero trust security model guidance, "zero trust may require a change in an organization's philosophy and culture around cybersecurity. The path to zero trust is a journey that will take years to implement."

As organizations lay the groundwork, it's important to remember that a change in philosophy is something that no one technology solution can provide. Instead, it demands the careful development and implementation of forward-thinking policies. Still, NDR technology that meets new visibility, threat detection, and forensic needs across an entire IT infrastructure can support and accelerate the pace of change.