Large language model (LLM) AIs like ChatGPT have captured the public’s attention in recent months, as users find creative new ways to use the technology.

It seems like LLMs, which can mimic human intelligence, are having a moment, but in reality, the moment will stretch into years as they keep getting smarter and more powerful.

The continual, rapid advancement of AI has generated a much-needed, and perhaps overdue, debate about the possible benefits and potential dangers of powerful AI models. AI holds the promise of accomplishing great things, such as modeling ways to deal with climate change or finding cures for diseases like cancer.

However, many smart and informed people have also raised serious concerns about AI. Some focus on the technology replacing jobs and distributing biased data. Others worry that it will contribute to a systemic erosion of trust by spreading disinformation and helping people create ever-more convincing deepfake videos or audio files, a topic I’ll address in a later blog post.

I understand these specific concerns about the future of AI, but from more of a 30,000-foot view, I’d suggest that we don’t really understand the scale and scope of the problems related to LLMs.

Some U.S. politicians and AI experts are calling for regulation and recommending that the U.S. create an AI licensing or approval process.

It’s important that we’re now having a discussion about the potential downsides to ever-more powerful AI models being deployed, but we shouldn’t lose sight of the tremendous potential benefits of this technology. We also need to be aware of the context in which these discussions are taking place and the specialized knowledge required to understand AI.

One issue is that it will be difficult for members of the U.S. Congress to keep up with the technology and the nuances of the debate over AI, given the rapid pace of development. Most members of Congress aren’t technology experts, and the discussion over AI demands a level of knowledge that’s in short supply. We don’t have the people with the necessary skills to deal with something advancing so quickly.

Moreover, for Congress to draw up regulations, it will have to depend on the expertise of the people working in the AI industry, and that’s the classic fox-guarding-the-henhouse scenario.

Another issue is that, in many ways, those advocating for regulations may already be playing catch-up. While companies like Microsoft, Google and ChatGPT creator OpenAI get a lot of the public attention, AI is advancing rapidly around the world. Dozens of large language models are being built as we speak, and some can be deployed on personal computers.

Take a look at the oddly named Hugging Face website: It currently has posted evaluations of the effectiveness of more than 90 LLMs and chatbots that are already built, with dozens of others in a queue to be tested.

So the question for potential regulators is whether they can put the genie back in the bottle after LLMs are deployed widely on personal computers. How can Congress tell users that their already deployed technology is suddenly illegal or at least unlicensed? Perhaps the state of Montana, which recently banned TikTok, will provide an indication of how a ban on LLMs might play out.

Beyond the high-level concerns about how AI is developing, the security executive in me focuses on the privacy and data-sharing implications of these LLM AIs. We’ve recently heard concerns from companies about their employees sharing trade secrets or other confidential business information with ChatGPT and similar AI tools.

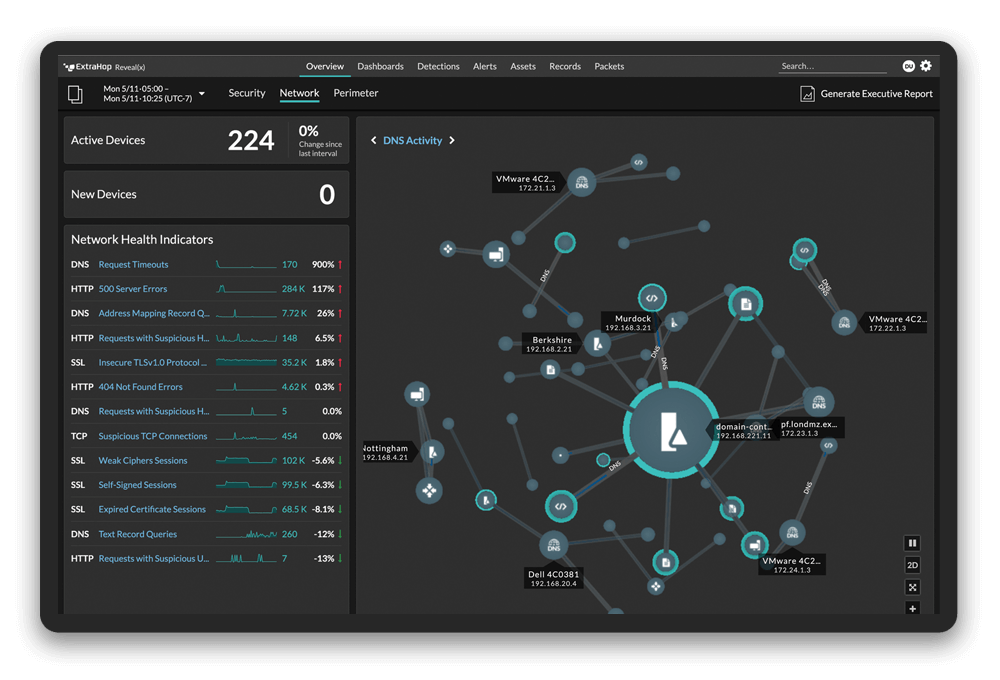

As a result, ExtraHop is taking action to help companies deal with potential AI data leakage. In mid-May, we released a new capability in our Reveal(x) network detection and response platform that provides customers with visibility into the devices and users on their networks that are connecting to OpenAI domains and the amount of data they are sharing. This visibility allows organizations to assess the risk involved with employee use of these tools and audit compliance with policies governing their use.
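For readers who want a concrete picture of what this kind of visibility means, here is a minimal Python sketch of the general idea: totaling the outbound bytes each device sends to a watched set of domains. It is an illustration only, not how Reveal(x) works and not its API. The flow-record fields (`device`, `dest_host`, `bytes_out`), the helper names, and the sample data are all hypothetical.

```python
from collections import defaultdict

# Assumption: the domain suffixes to watch; adjust to your own policy.
WATCHED_SUFFIXES = ("openai.com",)


def is_watched(hostname):
    """Return True if hostname is a watched domain or one of its subdomains."""
    host = hostname.lower().rstrip(".")
    return any(host == s or host.endswith("." + s) for s in WATCHED_SUFFIXES)


def bytes_sent_by_device(flows):
    """Total the outbound bytes per device for flows to watched domains.

    Each flow is a dict with hypothetical keys:
    "device", "dest_host", and "bytes_out".
    """
    totals = defaultdict(int)
    for flow in flows:
        if is_watched(flow["dest_host"]):
            totals[flow["device"]] += flow["bytes_out"]
    return dict(totals)


# Made-up sample records, for illustration only.
sample_flows = [
    {"device": "laptop-01", "dest_host": "api.openai.com", "bytes_out": 5000},
    {"device": "laptop-01", "dest_host": "chat.openai.com", "bytes_out": 1200},
    {"device": "laptop-02", "dest_host": "example.com", "bytes_out": 900},
    {"device": "laptop-03", "dest_host": "notopenai.com", "bytes_out": 700},
]

print(bytes_sent_by_device(sample_flows))
```

Matching on the domain suffix with a leading dot keeps lookalike hosts such as `notopenai.com` out of the totals. A report like this only shows who is sending how much data; it says nothing about what the data contains.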

However, as LLM use accelerates and developers release more AI-as-a-service tools, there are more issues to think about: specifically, what happens when those models start to make decisions about what data they share with each other?

The problem is that these models lack human judgment, and the makers of these AIs have an incentive to create services that share data, as a mutually beneficial transaction that allows each of them to get smarter.

Unless developers specifically build privacy rules into these AI models, there is no one to stop LLM A from talking to LLM B. Right now, we don’t have rules to stop that from happening, and the AIs will have to trust and monitor each other. I’m not convinced that’s a good solution.

Whether we’re thinking about specific issues like data sharing, or the larger AI landscape, it’s past time to have a serious discussion about the future of LLMs.