In the months since OpenAI released ChatGPT, the tech world has been abuzz with opinions about the potential applications of this latest incarnation of artificial intelligence (AI). Much ink has been spilled trying to predict where this technology will take us, from its potential to transform every industry to the possibility that it will only further erode the middle class. How much is hype (or doomerism), and how much is legit?

To answer those questions, let’s first talk about what generative AI is, and how we can use it to improve security.

What is Generative AI?

Put simply, generative AI is a tool that uses a corpus of data (text, images, code, etc.) to create something new. Most generative AI tools rely on one of three techniques: foundation models, generative adversarial networks (GANs), and variational autoencoders (VAEs).

ChatGPT, perhaps the most talked-about AI, uses a foundation model. This type of AI is “based mainly on transformation architectures, which embody a type of deep neural network that computes a numerical representation of training data”1 to detect how different elements of a data set relate to each other. Tools like ChatGPT use this technique on language data to create natural-sounding text based on the model’s analysis of how words are typically used together.

These models don’t “understand” the inputs or outputs in the same way a human does, which is why they sometimes return text that, while grammatically and syntactically correct, is factually inaccurate or nonsensical.

Generative adversarial networks, in contrast, are based on two neural networks that work, as the name implies, in opposition. “[O]ne, called the generator, specializes in generating objects of a specific type (e.g., images of human faces or dogs, or models of molecules); the other, the discriminator, learns to evaluate them as real or fake.”2 After a few million iterations, a GAN can produce deceptively realistic results. In fact, a recent study found that artificial faces are more likely to be perceived as real than genuine human faces are.
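To make the generator/discriminator loop concrete, here is a minimal sketch in plain Python. It is a toy under stated assumptions, not how any production GAN is built: the "objects" are single numbers drawn from a bell curve centered on 4, the generator and discriminator are each a single linear unit rather than a deep network, and every name and setting (`train_toy_gan`, `sigmoid`, the learning rate) is hypothetical.

```python
import math
import random


def sigmoid(t):
    # Numerically stable logistic function: maps any real number into [0, 1].
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    """Alternate discriminator and generator updates on 1-D toy data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator:     G(z) = a * z + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)

    for _ in range(steps):
        # Assumption: "real" objects are numbers drawn from N(4, 0.5).
        x_real = rng.gauss(4.0, 0.5)
        x_fake = a * rng.gauss(0.0, 1.0) + b

        # Discriminator step: lower -log D(real) - log(1 - D(fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w -= lr * (-(1.0 - d_real) * x_real + d_fake * x_fake)
        c -= lr * (-(1.0 - d_real) + d_fake)

        # Generator step: lower -log D(fake), i.e. try to fool the discriminator.
        z = rng.gauss(0.0, 1.0)
        x_fake = a * z + b
        d_fake = sigmoid(w * x_fake + c)
        grad_x = -(1.0 - d_fake) * w  # gradient of the loss w.r.t. the fake sample
        a -= lr * grad_x * z
        b -= lr * grad_x

    return (a, b), (w, c)


if __name__ == "__main__":
    (a, b), (w, c) = train_toy_gan()
    print("generator offset b:", b)
    print("discriminator score for x = 4:", sigmoid(w * 4.0 + c))
```

Each pass through the loop is one round of the contest: the discriminator adjusts to tell real samples from generated ones, then the generator adjusts to score better against the updated discriminator. A model this small may oscillate rather than settle, which is also a known difficulty when training real GANs.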

Like GANs, variational autoencoders are built from two neural networks: an encoder and a decoder. The encoder creates a compressed version of an object that retains its salient characteristics. “These high-dimensional representations can then be mapped onto a two-dimensional space where similar objects are clustered. New objects are generated by decoding a point in the dimensional space, say, between two objects.”3
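The idea of decoding a point between two objects can be sketched in a few lines of Python. This is only an illustration of the interpolation step, under heavy assumptions: `encode` and `decode` are hand-written stand-ins for networks that a real VAE would learn from data, the "objects" are four-number lists, and the probabilistic part of a VAE (encoding to a distribution rather than a single point) is left out entirely.

```python
def encode(obj):
    # Stand-in encoder (assumption): compress 4 numbers into a 2-number code.
    return [(obj[0] + obj[1]) / 2, (obj[2] + obj[3]) / 2]


def decode(code):
    # Stand-in decoder (assumption): expand a 2-number code back to 4 numbers.
    return [code[0], code[0], code[1], code[1]]


def interpolate(code_a, code_b, t):
    # Pick the point a fraction t of the way from code_a to code_b.
    return [(1 - t) * p + t * q for p, q in zip(code_a, code_b)]


if __name__ == "__main__":
    first = [0, 0, 2, 2]
    second = [4, 4, 6, 6]
    midpoint = interpolate(encode(first), encode(second), 0.5)
    print(decode(midpoint))  # a new object that sits between the two originals
```

The generated object is neither of the originals; it comes from decoding a code that lies between their compressed representations.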

Though some tech pundits would have you believe AI is the silver bullet for all your enterprise woes, the techniques are still fairly specialized. It’s important to understand the advantages and limitations of each one, so you can work with the model most suited to the objectives you want to achieve. A foundation model would be a good choice to create a help desk chatbot, but a GAN would be more effective if you want to pressure test a new malware detection system.

How Can I Use Generative AI for Security?

Generative AI can do a lot more than act as an artificial conversation partner, though even in that capacity there are many applications. For instance, an AI chatbot implemented as the first line of IT support would be able to correlate multiple tickets and help security teams uncover a widespread issue that hints at a compromise more quickly than a human could alone.
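As a rough illustration of the correlation step, the Python sketch below groups tickets by a shared symptom and flags any symptom reported by several different users. It assumes the chatbot has already turned each free-text ticket into a list of symptom labels; the ticket fields (`user`, `indicators`), the function name, and the threshold are all hypothetical.

```python
from collections import defaultdict


def find_widespread_issues(tickets, min_users=3):
    """Return symptoms reported by at least `min_users` distinct users."""
    users_by_indicator = defaultdict(set)
    for ticket in tickets:
        for indicator in ticket["indicators"]:
            # A set counts each user once, even if they file repeat tickets.
            users_by_indicator[indicator].add(ticket["user"])
    return {
        indicator: sorted(users)
        for indicator, users in users_by_indicator.items()
        if len(users) >= min_users
    }


if __name__ == "__main__":
    tickets = [
        {"user": "ana", "indicators": ["vpn-failure", "slow-laptop"]},
        {"user": "ben", "indicators": ["vpn-failure"]},
        {"user": "cy", "indicators": ["vpn-failure"]},
        {"user": "dee", "indicators": ["slow-laptop"]},
    ]
    print(find_widespread_issues(tickets))
```

A symptom that crosses the threshold is not proof of a compromise, only a prompt for the security team to look more closely.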

Beyond natural language processing applications, generative AI can also write code and evaluate binaries or stack traces to discover software flaws. The most obvious implementation is to speed the development process by augmenting human developers, but it could also be used by red teams to come up with novel attack techniques.

GANs and VAEs can be used to generate synthetic data, which can be helpful for training other AI models, particularly in cases where an organization has insufficient real data, or where that real data contains protected information (health records, financial data, etc.) that prevents its use.

GANs also have many potential applications for security researchers and red teams, who can use them to generate synthetic biometric data for highly sophisticated pen tests. Security researchers can also use GANs iteratively, the way attackers might: to create new forms of malware that can evade detection, or to reverse engineer the algorithm used in an email phishing filter, in order to better understand and counteract the way adversaries could use their tools. However, in the same way that bad actors leverage legitimate security tools like Cobalt Strike, GANs can also be turned toward malicious ends.

In IT, generative AI can be used to find the most efficient layout for components on a microchip, or to minimize latency in an organization’s network architecture.

The potential for cybersecurity is great, but generative AI is far from perfect. As noted above, foundation models often produce results that seem correct but prove not to be on closer inspection, a failure sometimes referred to as an “AI hallucination.” In a recent report, Gartner analysts recommend that “in order to decrease the likelihood of invalid/false output from generative AI, focus on use cases where the learning knowledge base (training dataset/corpus) is curated and has a relatively high level of accuracy.”4

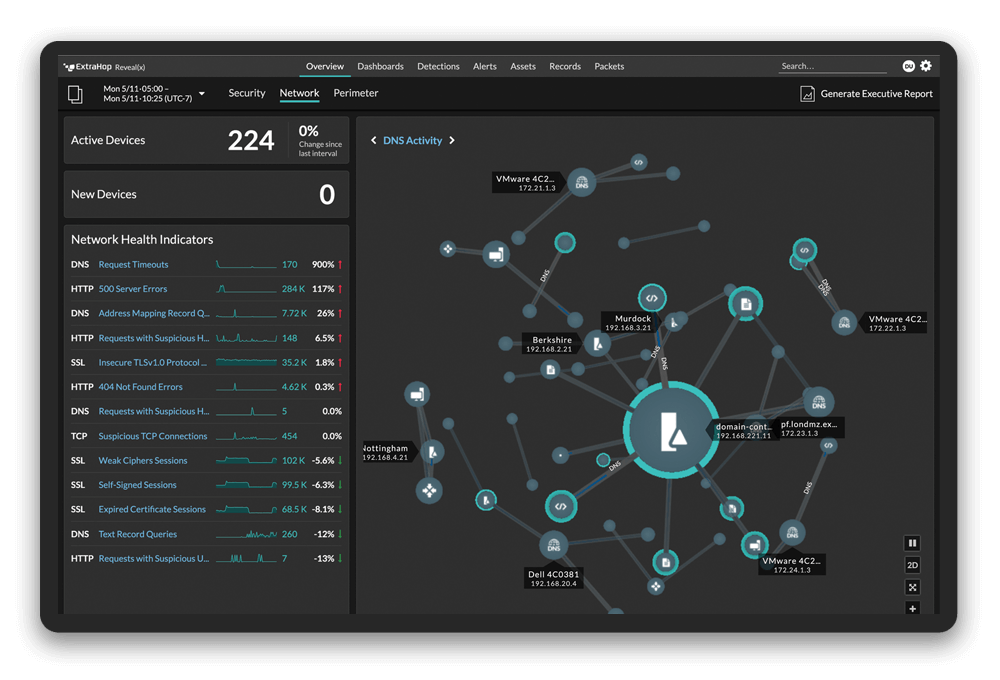

We now stand on the cusp of one of the largest potential spikes in productivity in recorded history. It would be wise to start experimenting with generative AI now so you aren’t left behind, but it would also be prudent to remain cautious in your implementation. Beyond the risk of AI hallucinations, many public tools built on foundation models, like ChatGPT, may retain user prompts, which can then inform responses to other users. We at ExtraHop advise against submitting anything to public generative AI tools that you wouldn’t want others to have access to, like sensitive data or proprietary information. Many of our customers have expressed concerns about employees sharing confidential data with these tools, so we recently released a new capability in Reveal(x) that allows customers to understand which users and devices are connecting to OpenAI domains and whether proprietary data may be at risk. Read more about it here.
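For readers who want a feel for what checking connections against a list of domains involves, here is a minimal Python sketch. It does not describe how Reveal(x) works. It assumes you already have connection records as (device, hostname) pairs, and the watched-domain list, function names, and sample device names are all hypothetical.

```python
WATCHED_DOMAINS = ("openai.com",)  # assumption: extend with the domains you care about


def is_watched(hostname, watched=WATCHED_DOMAINS):
    # Match the domain itself or any subdomain, but not look-alikes
    # such as "notopenai.com".
    host = hostname.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in watched)


def devices_contacting(records, watched=WATCHED_DOMAINS):
    """Map each device to the sorted watched hostnames it connected to."""
    hits = {}
    for device, hostname in records:
        if is_watched(hostname, watched):
            hits.setdefault(device, set()).add(hostname.lower().rstrip("."))
    return {device: sorted(hosts) for device, hosts in hits.items()}


if __name__ == "__main__":
    records = [
        ("laptop-7", "api.openai.com"),
        ("laptop-7", "example.org"),
        ("kiosk-2", "notopenai.com"),
    ]
    print(devices_contacting(records))
```

Knowing which devices connect to a domain says nothing about what was sent; judging whether proprietary data is at risk takes further analysis of the traffic itself.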